A Bad Year for Security Incidents

I was recently asked by an executive if there wasn’t a component of urban myth to all this recent emphasis on CyberCriminals (crackers, script kiddies, virus writers and the like). Were there really that many attacks on systems? Are viruses really the problem the anti-virus vendors make them out to be? Are security breaches really costing business the millions of dollars reported?

These are all good questions. Of course we shouldn’t take all the hype and hysteria on faith. There are many in the industry for whom crying wolf is self-serving. Nevertheless, there are many sources of somewhat objective information on security breaches.

One of the most difficult aspects of CyberCrime to pin down is the amount of actual damages. You’ll find estimates all over the map, from the FBI’s estimate that computer losses are up to $10 billion a year to Computer Economics’ estimate that the worldwide impact of malicious code was $13.2 billion in 2001. Computer Economics stated that the biggest losses were caused by SirCam ($1.15 billion), Code Red (all variants, $2.62 billion), and NIMDA ($635 million).
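For readers who like to check the math, here is a quick Python sketch that adds up the three worms Computer Economics named and compares the sum with its $13.2 billion worldwide figure. It uses only the numbers quoted above; the variable names are just for illustration.

```python
# Back-of-envelope check of the Computer Economics figures quoted above
# (2001, US dollars, in billions). Only the three named worms are included.
worm_losses = {
    "SirCam": 1.15,
    "Code Red (all variants)": 2.62,
    "NIMDA": 0.635,
}
total_malicious_code_impact = 13.2  # worldwide malicious code estimate for 2001

top_three = sum(worm_losses.values())
share = top_three / total_malicious_code_impact

print(f"Top three worms: ${top_three:.3f} billion")
print(f"Share of the $13.2 billion estimate: {share:.1%}")
```

In other words, just three worms account for roughly a third of the total estimate.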

Estimates are fine, but published reports of actual losses are better. However, most corporations would rather be summoned before Congress than admit to a security problem. Of course, if they can use a security breach to justify bad fiscal performance, like CryptoLogic did, that’s another story. CryptoLogic, a Canadian maker of gambling software, reported a 10 percent drop in fourth-quarter revenue primarily due to a charge taken as the result of a security breach.

So where are these threats coming from? Most people point to CyberCriminals on the Internet, but they may be only a small part of the problem. In a CyberCrime survey conducted by the FBI and the Computer Security Institute, 81 percent of corporate respondents said the most likely source of attack was inside the company. This confirms the conventional wisdom among security administrators that the biggest problem is your own employees or contractors. And according to an @stake security research report entitled The Injustice of Insecure Software, 30 to 50 percent of the digital risks facing IT infrastructures are due to flaws in commercial and custom software. According to CERT®, reported security vulnerabilities more than doubled in the last year, from 1,090 holes in 2000 to 2,437 in 2001. Likewise, the number of reported incidents jumped from 21,756 in 2000 to 52,658 in 2001.
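To put "more than doubled" in concrete terms, the short Python sketch below computes the growth factor for each pair of CERT counts quoted above. The helper name `growth_factor` is hypothetical, chosen only for this example.

```python
def growth_factor(before: int, after: int) -> float:
    """Return how many times larger `after` is than `before`."""
    return after / before

# CERT counts quoted in the text.
vulnerabilities = growth_factor(1_090, 2_437)  # reported vulnerabilities, 2000 -> 2001
incidents = growth_factor(21_756, 52_658)      # reported incidents

print(f"Vulnerabilities grew {vulnerabilities:.2f}x")
print(f"Incidents grew {incidents:.2f}x")
```

Both ratios come out above 2, so incidents grew even faster than the vulnerabilities behind them.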

This year is very likely to be worse, according to SecurityFocus co-founder and CEO Arthur Wong, who spoke recently at RSA Conference 2002. According to Wong, around 30 new software vulnerabilities were discovered each week in 2001, which represented a slowdown from the trend that had produced a doubling of new vulnerabilities each year for much of the late ’90s. He expects 2002 to bring a return to the old growth rates, and predicted that 50 new software security holes will be found each week in the coming year.

Michael Vatis, the former director of the National Infrastructure Protection Center (NIPC), agrees, saying, “The rate of growth of our vulnerabilities is exceeding the rate of improvements in security measures.” He’s most worried about CyberAttacks that could bring down ATMs, power grids and public transportation systems.

If you’d like to get a near real-time picture of attacks worldwide, check out SecurityFocus’ ARIS Predictor. This service shows the actual number of incidents worldwide based on a sample of installations that contribute log information.

Against this rising tide of attack reports is a contrary stat: Security breaches and hacking attacks have actually decreased since the September 11 terrorist attacks, according to the Federal Computer Incident Response Center (FedCIRC). FedCIRC shows just 15 incidents of intruder activity reported in December 2001, less than a third of that recorded in December 2000.

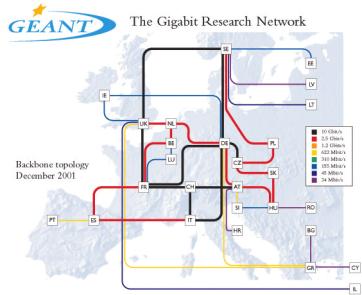

And where is all this malicious code being written? It turns out Europe is a virus hotbed, according to a report from mi2g’s Intelligence Unit. The Continent accounts for 57 percent of the world’s malicious code writing activity, with 21 percent originating from Eastern Europe, including Russia. While conventional wisdom may tell us otherwise, North America only accounts for 17 percent of viruses developed, and the Far East only 13 percent. The most prolific virus writers, according to the report, are Zombie, author of the Executable Trash Virus Generator; Benny from 29A virus group and author of the .Net Donut virus; Black Baron, author of Smeg; David Smith, author of Melissa; and Chen Ing-Hau, author of CIH.

So the solution for businesses is to stay alert, and stay patched. Make sure you’re always running the latest antivirus software and the latest patches on your operating systems and applications. However, Alan Paller, director of Research at the SANS Institute, said, “There are certain attacks that nobody can block. . . . If your people aren’t absolutely, all the time on the latest patches, you’re going to get hit.”

So hey, hey, hey! Let’s be careful out there! If you’re in the Twin Cities on March 12, be sure to attend the CyberCrime Fighter Forum 2002 and learn more about how you can be safe.

Briefly Noted

- Shameless Self-Promotion Dept.: Did I mention CyberCrime Fighter Forum 2002? Also, in conjunction with the new CTOMentor paper, Basic Home Networking Security, we’re running a survey on home networking policies and procedures. The first survey cycle closed yesterday, but you can get in on the second, which will run through March 11. CTOMentor is also offering a two-part white paper on peer-to-peer technology: Peer-to-Peer Computing and Business Networks: More Than Meets the Ear. Part 1, What is P2P?, is available for free on the CTOMentor Web site. Part 2, How Are Businesses Using P2P?, is available for $50.

CTOMentor

- International Reach: A note from a reader in Guam prompted me to check out the subscription list and see where in the world SNS is going. There are subscribers in Australia, Canada, Germany, Greece, Guam, India, Italy, Japan, and the UK. Besides noting the obvious country suffixes on some of the email addresses, I used a cool tool called VisualRoute to determine subscribers’ locations. Alert SNS Reader Bob Burkhart let me know about this program. You type in a URL or an email address, and it shows you all the network hops between your computer and the target. That’s not spectacular, but what is nice is that VisualRoute looks up the DNS records for the final computer and pulls out any location information, which it reports to you. The bad thing about this software, which is free for trial use, is that it doesn’t clean up after itself completely when you exit it. On a Windows 2000 machine it left MsPMSPSv.exe (the Microsoft Digital Rights Manager) and wjview.exe (Microsoft VM Command Line Interpreter) running after it exited. I recommend using your software firewall (What’s that? You don’t have a software firewall? Get one! And read the new CTOMentor paper on home network security) to grant only one-time Internet access to the various programs VisualRoute uses (including vrping1.exe and vrdns2.exe), just to be safe.

VisualWare

- Microsoft As Security Threat: I missed this item from the irreverent UK site, The Register, back in December. They pull no punches in describing Microsoft as a bigger threat to security than Osama Bin Laden. Read the article and see if you agree.

The Register

- MessageLabs Says Viruses on the Increase: MessageLabs, which sells a hosted antivirus service for email, reported that it detected one virus per 370 emails in 2001, compared to one in 700 in 2000 and one in 1,400 in 1999. The 2001 total of 1,628,750 infected emails that MessageLabs detected broke out this way:

- More than 500,000 were infected with the SirCam.A virus

- 258,242 with BadTrans.B

- 152,102 with Magistr.A

- 136,585 with Goner.A

- 90,473 with Hybris.B.
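The MessageLabs ratios are easier to compare as percentages. The Python sketch below converts them and, as a rough extrapolation that MessageLabs did not itself publish, multiplies the 2001 infected-email total by the 1-in-370 ratio to estimate how much mail was scanned. It assumes the ratio is exact, which it almost certainly is not, so treat the volume figure as an order of magnitude only.

```python
# MessageLabs figures quoted above: one infected email per N emails scanned.
one_in = {1999: 1400, 2000: 700, 2001: 370}

for year, n in one_in.items():
    print(f"{year}: {1 / n:.3%} of email infected")

# Rough implied 2001 scanning volume, ASSUMING the 1-in-370 ratio applies
# exactly to all 1,628,750 infected emails (an estimate, not a MessageLabs figure).
infected_2001 = 1_628_750
implied_scanned = infected_2001 * one_in[2001]
print(f"Implied emails scanned in 2001: about {implied_scanned / 1e6:.0f} million")

# SirCam.A is reported only as "more than 500,000", so this share is a lower bound.
sircam_share_min = 500_000 / infected_2001
print(f"SirCam.A share: at least {sircam_share_min:.1%}")
```

The infection rate roughly doubled each year, and SirCam.A alone accounted for at least about 31 percent of the infected mail MessageLabs caught in 2001.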

own problems. The company’s sales target is 150,000 3G users by March, but it only sold 11,000 3G handsets in October, when its 3G service, FOMA, went live with regional coverage. DoCoMo launched a popular trial video service, imotion, in November that will run through March. But just a week into the trial, the company had to recall 1,500 NEC N2002 handsets due to a software problem. The glitch destroyed users’ e-mails, content based on Java, call records and some of the handset’s personalized settings.